RAC 12cR2 Installation Step by Step on Oracle Linux 7.4

This article describes the installation of Oracle Grid Infrastructure and Oracle Database 12c Release 2 RAC (2-node cluster) on Linux (Oracle Linux 7, 64-bit) using ASMLib.

Starting with Oracle Grid Infrastructure 12c Release 2 (12.2), the installation media is replaced with a zip file for the Oracle Grid Infrastructure installer. Run the installation wizard after extracting the zip file into the target home path.

Note: These installation instructions assume you do not already have any Oracle software installed on your system. If you have already installed Oracle ASMLIB, then you will not be able to install Oracle ASM Filter Driver (Oracle ASMFD) until you uninstall Oracle ASMLIB. You can use Oracle ASMLIB instead of Oracle ASMFD for managing the disks used by Oracle ASM, but those instructions are not included in this guide.

| Type | Name | Public | Private-1 | Private-2 |
|---|---|---|---|---|
| Server | rac131.ora.com | 192.168.1.131 | 10.10.10.131 | 20.20.20.131 |
| Server | rac132.ora.com | 192.168.1.132 | 10.10.10.132 | 20.20.20.132 |
| SCAN | rac-scan.ora.com | 192.168.1.41, 192.168.1.42, 192.168.1.43 | | |
| VIP | rac131-vip.ora.com | 192.168.1.166 | | |
| VIP | rac132-vip.ora.com | 192.168.1.167 | | |
| DNS | dns101.ora.com | 192.168.1.101 | | |
| DNS Internet resolve | | 192.168.1.7 | | |
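The SCAN name in the table is expected to resolve to three addresses through DNS. As a minimal sketch (the function name `count_ipv4` is an illustrative assumption, not part of any Oracle tooling), the following shell function counts the distinct IPv4 addresses in lookup results read from standard input:

```shell
#!/usr/bin/env bash
# Sketch: count distinct IPv4 addresses, one address per input line.
# count_ipv4 is a hypothetical helper name used only for this illustration.
count_ipv4() {
  # Keep only lines that look like a dotted-quad address, de-duplicate, count.
  grep -E '^[0-9]{1,3}(\.[0-9]{1,3}){3}$' | sort -u | wc -l | tr -d ' '
}

# Example using the three SCAN addresses from the table.
printf '%s\n' 192.168.1.41 192.168.1.42 192.168.1.43 | count_ipv4
```

On a node where the `dig` utility is installed, piping `dig +short rac-scan.ora.com` into `count_ipv4` should print 3 if DNS is set up as in the table.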

12c RAC New Features for Clusterware

Some of the new features introduced in 12c Release 1 and 2:

· Oracle Flex ASM - Reduces the per-node overhead of running an ASM instance. Database instances can use an ASM instance on a remote node during planned or unplanned downtime of the local ASM instance.

· Flex ASM supports up to 511 disk groups and larger LUNs of up to 32 PB.

· ASM Disk Scrubbing - ASM can scrub disks to discover logical corruptions and repair them automatically in normal or high redundancy disk groups, e.g.

alter diskgroup DATA scrub power low;
alter diskgroup DATA scrub disk datadisk_002 repair power low;
alter diskgroup DATA scrub power auto;   -- AUTO is the default
alter diskgroup DATA scrub power high;
alter diskgroup DATA scrub power max;

· Grid Infrastructure rolling migration support for one-off patches - When applying a one-off patch to an ASM instance, the databases it serves can be pointed to a different ASM instance.

· RAW/Block Storage Devices - Oracle Database 12c and Oracle Clusterware 12c no longer support raw storage devices. The files must be moved to Oracle ASM before upgrading to Oracle Clusterware 12c.

· Oracle ASM Disk Resync & Rebalance enhancements.

· Deprecation of single-letter SRVCTL command-line interface (CLI) options - All SRVCTL commands have been enhanced to accept full-word options instead of the single-letter options. All new SRVCTL command options added in this release support full-word options only and do not have single-letter equivalents.

There is also an option to simulate cluster commands without executing them:

srvctl stop database -d ccbprod -eval
crsctl eval modify resource ccb_pool -attr value

The database must be policy-managed rather than administrator-managed; otherwise -eval is rejected:

[oracle@rac131 ~]$ srvctl stop database -d ccbprod -eval
PRKO-2712 : Administrator-managed database ccbprod is not supported with -eval option

The -eval option is available with the following commands:

$ srvctl { add | start | stop | modify | relocate } database ... -eval
$ srvctl { add | start | stop | modify | relocate } service ... -eval
$ srvctl { add | modify | remove } srvpool ... -eval
$ srvctl relocate server ... -eval

· The deinstallation tool is integrated with the database installation media. You can run it using the runInstaller command with the -deinstall and -home options from the base directory of the database, client, or grid infrastructure installation media.

Example:

runInstaller -deinstall -home complete path of Oracle home [-silent] [-checkonly] [-local] [-paramfile complete path of input parameter property file] [-params name1=value name2=value . . .] [-o complete path of directory for saving files] [-help]

$ cd /directory_path/
$ ./runInstaller -deinstall -home /u01/app/oracle/product/12.1.0/dbhome_1/

· IPv6 Support - Oracle RAC 12c now supports IPv6 for client connectivity; the interconnect is still on IPv4.

IPv6 address 2001:0DB8:0:0::200C:417A
IPv4 address 192.168.1.131

Oracle Database 12c Release 2 and IPv6

In Oracle Database 12c Release 2, Oracle provides full IPv6 support for all components and features. Specifically:

1. IPv6 on the private network in an Oracle Clusterware configuration is now supported on all platforms.
2. IPv6 client connectivity over public networks to Oracle RAC and Clusterware running on Windows is now supported.
3. ASM and ONS-based FAN notifications for single-instance and RAC databases running on Windows are supported.

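· Oracle 12c Release 2 (12.2) adds an extra administrative privilege called SYSRAC, with a corresponding OS group (racdba in the example below). It is not supported in Oracle 12.1.

# 12.2 only. The -g option takes a numeric group ID; replace <GID> with an unused one.
groupadd -g <GID> racdba

To allow a database user to connect with one of the admin privileges, you need to grant the relevant privilege to that user. SYSRAC is the exception: you can't grant sysrac to a database user.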

. oraenv (+ASM1)
sqlplus / as sysasm
SQL> create user dbaocm_asm identified by amit123;
GRANT sysasm TO dbaocm_asm;
sqlplus dbaocm_asm as sysasm

. oraenv (ccbprod1)
sqlplus / as sysdba
grant connect to dbaocm identified by amit123;
grant connect to dbaocm_oper identified by amit123;
grant connect to dbocm_bkp identified by amit123;
grant connect to dbaocm_dg identified by amit123;
grant connect to dbaocm_km identified by amit123;

GRANT sysdba TO dbaocm;
GRANT sysoper TO dbaocm_oper;
GRANT sysbackup TO dbocm_bkp;
GRANT sysdg TO dbaocm_dg;
GRANT syskm TO dbaocm_km;

sqlplus dbaocm as sysdba
sqlplus dbaocm_oper as sysoper
sqlplus dbocm_bkp as sysbackup
sqlplus dbaocm_dg as sysdg
sqlplus dbaocm_km as syskm

The users will then be able to connect using admin privileges.

$ sqlplus my_dba_user as sysdba
$ sqlplus my_oper_user as sysoper
$ sqlplus my_asm_user as sysasm
$ sqlplus my_backup_user as sysbackup
$ sqlplus my_dg_user as sysdg
$ sqlplus my_km_user as syskm

12.2 only:

$ sqlplus / as sysrac

SQL> select USERNAME,SYSDBA,SYSOPER,SYSASM,SYSBACKUP,SYSDG,SYSKM,AUTHENTICATION_TYPE from v$pwfile_users;

USERNAME        SYSDB SYSOP SYSAS SYSBA SYSDG SYSKM AUTHENTI
--------------- ----- ----- ----- ----- ----- ----- --------
SYS             TRUE  TRUE  FALSE FALSE FALSE FALSE PASSWORD
SYSDG           FALSE FALSE FALSE FALSE TRUE  FALSE PASSWORD
SYSBACKUP       FALSE FALSE FALSE TRUE  FALSE FALSE PASSWORD
SYSKM           FALSE FALSE FALSE FALSE FALSE TRUE  PASSWORD
DBAOCM          TRUE  FALSE FALSE FALSE FALSE FALSE PASSWORD
DBAOCM_OPER     FALSE TRUE  FALSE FALSE FALSE FALSE PASSWORD
DBOCM_BKP       FALSE FALSE FALSE TRUE  FALSE FALSE PASSWORD
DBAOCM_DG       FALSE FALSE FALSE FALSE TRUE  FALSE PASSWORD
DBAOCM_KM       FALSE FALSE FALSE FALSE FALSE TRUE  PASSWORD

· Transparent Application Failover (TAF) is extended in Oracle 12.2 to fail over DML transactions (insert/update/delete). TAF instructs Oracle Net to fail over a failed connection to a different listener. This enables the user to continue working on the new connection as if the original connection had never failed. TAF deserves further exploration.

· Running root.sh across the RAC nodes can now be done automatically by Oracle Universal Installer (OUI), but we still have the option to run it manually, which makes it easier to explore and debug runtime execution errors.

· Per Subnet multiple SCAN - In RAC 12c, multiple Single Client Access Names (SCANs) can be configured per cluster, one per subnet. Before implementing additional SCANs, the OS provisioning of new network interfaces or new VLAN tagging has to be completed.

· Shared Password file in ASM - A single password file can now be stored in an ASM disk group and shared by all nodes. There is no need to keep individual copies for each instance.

· ASM Multiple Diskgroup Rebalance and Disk Resync Enhancements
Resync Power limit - Allows multiple disk groups to be resynced concurrently.
Disk Resync Checkpoint - Faster recovery from instance failures.

· "ghctl" command for patching.

· Grid Infrastructure software optionally creates the Grid Infrastructure Management Repository (mgmtdb) database, which runs on only one node, to assist in management operations.

Setup 12c RAC

Set up an Oracle Linux 7.4 x64 installation with 3 network interface cards (NICs). Each server should have a minimum of 50 GB of local storage and 12 GB or more of RAM, with swap sized as per OS guidance. The firewall and SELinux should either be disabled or configured to open the required ports and permissions.

Oracle Installation Prerequisites

Perform either the Automatic Setup or the Manual Setup to complete the basic prerequisites. The Additional Setup is required for all installations.

[oracle@rac131 ~]$ more /etc/resolv.conf
# Generated by NetworkManager
search ora.com
nameserver 192.168.1.101 ###(DNS)
nameserver 192.168.1.7 ###(Internet Proxy Server)

# cd /etc/yum.repos.d
# wget http://yum.oracle.com/public-yum-ol7.repo

If you plan to use the "oracle-database-server-12cR2-preinstall" package to perform all your prerequisite setup, issue the following command.

# yum install -y oracle-database-server-12cR2-preinstall

Additional RPMs to install on both nodes, from Public Yum:

yum install binutils -y
yum install compat-libstdc++-33 -y
yum install compat-libstdc++-33.i686 -y
yum install gcc -y
yum install gcc-c++ -y
yum install glibc -y
yum install glibc.i686 -y
yum install glibc-devel -y
yum install glibc-devel.i686 -y
yum install ksh -y
yum install libgcc -y
yum install libgcc.i686 -y
yum install libstdc++ -y
yum install libstdc++.i686 -y
yum install libstdc++-devel -y
yum install libstdc++-devel.i686 -y
yum install libaio -y
yum install libaio.i686 -y
yum install libaio-devel -y
yum install libaio-devel.i686 -y
yum install libXext -y
yum install libXext.i686 -y
yum install libXtst -y
yum install libXtst.i686 -y
yum install libX11 -y
yum install libX11.i686 -y
yum install libXau -y
yum install libXau.i686 -y
yum install libxcb -y
yum install libxcb.i686 -y
yum install libXi -y
yum install libXi.i686 -y
yum install make -y
yum install sysstat -y
yum install unixODBC -y
yum install unixODBC-devel -y
yum install zlib-devel -y
yum install zlib-devel.i686 -y
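The same package list can also be handed to yum in a single transaction. The following is a sketch only: `DRY_RUN` and `install_packages` are names invented for this example, and with the default setting the command is printed rather than executed.

```shell
#!/usr/bin/env bash
# Sketch: install the additional packages in one yum transaction.
# DRY_RUN is a hypothetical switch for this example; with its default of 1
# the command is only printed, so nothing is installed by accident.

packages=(
  binutils compat-libstdc++-33 compat-libstdc++-33.i686
  gcc gcc-c++ glibc glibc.i686 glibc-devel glibc-devel.i686 ksh
  libgcc libgcc.i686 libstdc++ libstdc++.i686
  libstdc++-devel libstdc++-devel.i686
  libaio libaio.i686 libaio-devel libaio-devel.i686
  libXext libXext.i686 libXtst libXtst.i686
  libX11 libX11.i686 libXau libXau.i686
  libxcb libxcb.i686 libXi libXi.i686
  make sysstat unixODBC unixODBC-devel zlib-devel zlib-devel.i686
)

DRY_RUN="${DRY_RUN:-1}"

install_packages() {
  if [ "$DRY_RUN" = "1" ]; then
    # Show what would be run.
    echo "yum install -y ${packages[*]}"
  else
    # Run as root on both nodes.
    yum install -y "${packages[@]}"
  fi
}

install_packages
```

Setting `DRY_RUN=0` when invoking the script as root performs the actual installation.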

Install ASMLib and its support packages (https://www.oracle.com/linux/downloads/linux-asmlib-v7-downloads.html):

yum install -y oracleasm-support
yum install -y kmod-oracleasm

Download oracleasmlib from https://download.oracle.com/otn_software/asmlib/oracleasmlib-2.0.12-1.el7.x86_64.rpm and install the downloaded file:

yum install -y oracleasmlib-2.0.12-1.el7.x86_64.rpm

Create Shared Storage Disk for CRS and Data

Shut down both machines, add the VirtualBox installation directory to the PATH of your command prompt, and execute the commands below. They create the storage disks and attach them to both RAC VMs as shareable disks. My VM names are:

RAC131-12.2-7.4
RAC132-12.2-7.4

--------------------------------------------------------------
VBoxManage createhd --filename CRS01.vdi --size 8192 --format VDI --variant Fixed
VBoxManage createhd --filename CRS02.vdi --size 8192 --format VDI --variant Fixed
VBoxManage createhd --filename CRS03.vdi --size 8192 --format VDI --variant Fixed
VBoxManage createhd --filename CRS04.vdi --size 8192 --format VDI --variant Fixed
VBoxManage createhd --filename CRS05.vdi --size 8192 --format VDI --variant Fixed

VBoxManage createhd --filename datadisk1.vdi --size 10240 --format VDI --variant Fixed
VBoxManage createhd --filename datadisk2.vdi --size 10240 --format VDI --variant Fixed
VBoxManage createhd --filename datadisk3.vdi --size 10240 --format VDI --variant Fixed
VBoxManage createhd --filename datadisk4.vdi --size 10240 --format VDI --variant Fixed

VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 1 --device 0 --type hdd --medium CRS01.vdi --mtype shareable
VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 2 --device 0 --type hdd --medium CRS02.vdi --mtype shareable
VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 3 --device 0 --type hdd --medium CRS03.vdi --mtype shareable
VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 4 --device 0 --type hdd --medium CRS04.vdi --mtype shareable
VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 5 --device 0 --type hdd --medium CRS05.vdi --mtype shareable

VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 6 --device 0 --type hdd --medium datadisk1.vdi --mtype shareable
VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 7 --device 0 --type hdd --medium datadisk2.vdi --mtype shareable
VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 8 --device 0 --type hdd --medium datadisk3.vdi --mtype shareable
VBoxManage storageattach RAC131-12.2-7.4 --storagectl "SATA" --port 9 --device 0 --type hdd --medium datadisk4.vdi --mtype shareable

VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 1 --device 0 --type hdd --medium CRS01.vdi --mtype shareable
VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 2 --device 0 --type hdd --medium CRS02.vdi --mtype shareable
VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 3 --device 0 --type hdd --medium CRS03.vdi --mtype shareable
VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 4 --device 0 --type hdd --medium CRS04.vdi --mtype shareable
VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 5 --device 0 --type hdd --medium CRS05.vdi --mtype shareable

VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 6 --device 0 --type hdd --medium datadisk1.vdi --mtype shareable
VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 7 --device 0 --type hdd --medium datadisk2.vdi --mtype shareable
VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 8 --device 0 --type hdd --medium datadisk3.vdi --mtype shareable
VBoxManage storageattach RAC132-12.2-7.4 --storagectl "SATA" --port 9 --device 0 --type hdd --medium datadisk4.vdi --mtype shareable

If you have the Linux firewall enabled, you will need to disable it.

# systemctl stop firewalld
# systemctl disable firewalld

Make sure NTP (Chrony on OL7/RHEL7) is enabled.

# systemctl enable chronyd
# systemctl restart chronyd

Create the Grid and database home directories:

mkdir -p /u01/app/12.2.0.1/GRID
mkdir -p /u01/app/oracle/product/12.2.0.1/DB_1
chown -R oracle:oinstall /u01
chmod -R 775 /u01/

Format the shared disks you created, on any one of the nodes, using the commands below in one go.

echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdb
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdc
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdd
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sde
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdf
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdg
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdh
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdi
echo -e "o\nn\np\n1\n\n\nw" | fdisk /dev/sdj

[oracle@rac131 ~]$ sudo fdisk -l | grep B
Disk /dev/sda: 107.4 GB, 107374182400 bytes, 209715200 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdb: 8589 MB, 8589934592 bytes, 16777216 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdc: 8589 MB, 8589934592 bytes, 16777216 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdd: 8589 MB, 8589934592 bytes, 16777216 sectors
Device Boot Start End Blocks Id System
Disk /dev/sde: 8589 MB, 8589934592 bytes, 16777216 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdg: 10.7 GB, 10737418240 bytes, 20971520 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdf: 8589 MB, 8589934592 bytes, 16777216 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdh: 10.7 GB, 10737418240 bytes, 20971520 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdj: 10.7 GB, 10737418240 bytes, 20971520 sectors
Device Boot Start End Blocks Id System
Disk /dev/sdi: 10.7 GB, 10737418240 bytes, 20971520 sectors
Device Boot Start End Blocks Id System
Disk /dev/mapper/ol_rac131-swap: 17.2 GB, 17179869184 bytes, 33554432 sectors
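The per-disk fdisk commands used for partitioning follow one pattern, so they can be collapsed into a loop. This is a sketch under assumptions: the device letters b through j match the nine shared disks in the listing above and may differ on another system, and `DRY_RUN`, `partition_disk`, and `partition_all` are names made up for this example. By default nothing is written to disk.

```shell
#!/usr/bin/env bash
# Sketch: partition every shared disk with one loop.
# DRY_RUN is a hypothetical safety switch; with its default of 1 the
# script only reports what it would do.

DRY_RUN="${DRY_RUN:-1}"

partition_disk() {
  local dev="$1"
  if [ "$DRY_RUN" = "1" ]; then
    echo "dry run: would partition $dev"
  else
    # o = new DOS label, n/p/1 = new primary partition 1, the two empty
    # answers accept the default first and last sector, w = write and exit.
    printf 'o\nn\np\n1\n\n\nw\n' | fdisk "$dev"
  fi
}

partition_all() {
  local letter
  # Assumed device range: /dev/sdb through /dev/sdj.
  for letter in b c d e f g h i j; do
    partition_disk "/dev/sd${letter}"
  done
}

partition_all
```

Run it as root on one node only, with `DRY_RUN=0`, once the dry-run output lists the intended devices.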

[root@rac131 ~]# /sbin/oracleasm configure -i
Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library
driver. The following questions will determine whether the driver is
loaded on boot and what permissions it will have. The current values
will be shown in brackets ('[]'). Hitting <ENTER> without typing an
answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface [oracle]:
Default group to own the driver interface [dba]:
Start Oracle ASM library driver on boot (y/n) [y]:
Scan for Oracle ASM disks on boot (y/n) [y]:
Writing Oracle ASM library driver configuration: done

Load the ASM modules without restarting:

[root@rac131 ~]# /sbin/oracleasm init
[root@rac131 ~]# /usr/sbin/oracleasm status
Checking if ASM is loaded: yes
Checking if /dev/oracleasm is mounted: yes

[root@rac131 ~]# /usr/sbin/oracleasm createdisk CRS_DISK01 /dev/sdb1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk CRS_DISK02 /dev/sdc1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk CRS_DISK03 /dev/sdd1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk CRS_DISK04 /dev/sde1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk CRS_DISK05 /dev/sdf1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk DATA_DISK01 /dev/sdg1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk DATA_DISK02 /dev/sdh1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk DATA_DISK03 /dev/sdi1
Writing disk header: done
Instantiating disk: done
[root@rac131 ~]# /usr/sbin/oracleasm createdisk DATA_DISK04 /dev/sdj1
Writing disk header: done
Instantiating disk: done

[root@rac131 ~]# /usr/sbin/oracleasm scandisks
Reloading disk partitions: done
Cleaning any stale ASM disks...
Scanning system for ASM disks...

[root@rac131 ~]# /usr/sbin/oracleasm listdisks
CRS_DISK01
CRS_DISK02
CRS_DISK03
CRS_DISK04
CRS_DISK05
DATA_DISK01
DATA_DISK02
DATA_DISK03
DATA_DISK04
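Before starting the installer it can help to confirm that every expected label shows up in the listing. The sketch below is illustrative only: `missing_asm_disks` is a made-up helper name, and the listing is read from standard input so the check can be tried on a machine without ASMLib.

```shell
#!/usr/bin/env bash
# Sketch: report which expected ASMLib disk labels are absent from a listing.
# missing_asm_disks is a hypothetical helper; the labels are the ones created above.

expected_disks="CRS_DISK01 CRS_DISK02 CRS_DISK03 CRS_DISK04 CRS_DISK05 DATA_DISK01 DATA_DISK02 DATA_DISK03 DATA_DISK04"

missing_asm_disks() {
  local listing name
  listing="$(cat)"
  for name in $expected_disks; do
    # -x matches whole lines only, -F treats the label as a fixed string.
    if ! printf '%s\n' "$listing" | grep -qxF "$name"; then
      echo "$name"
    fi
  done
}

# Example: a listing in which DATA_DISK04 is absent.
printf '%s\n' CRS_DISK01 CRS_DISK02 CRS_DISK03 CRS_DISK04 CRS_DISK05 \
  DATA_DISK01 DATA_DISK02 DATA_DISK03 | missing_asm_disks
```

On a cluster node the input would come from `/usr/sbin/oracleasm listdisks | missing_asm_disks`; empty output means all nine labels are visible.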

Install the Grid Infrastructure

Make sure both machines are started. The Grid Infrastructure (GI) is now an image installation, so perform the following on the first node, rac131, as the "oracle" user.

Go to the software directory where you downloaded the GI software:

[oracle@rac131 ~]$ cd /ora_global_nfs/software/Oracle/database/12.2.0.1/12.2.0.1_OCT2020/GRID/
[oracle@rac131 GRID]$ ls
V840012-01.zip

Unzip the Gold Image into the GRID home as below:

[oracle@rac131 GRID]$ unzip -d /u01/app/12.2.0.1/GRID V840012-01.zip

Archive: V840012-01.zip
creating: /u01/app/12.2.0.1/GRID/addnode/
inflating: /u01/app/12.2.0.1/GRID/addnode/addnode_oraparam.ini.sbs
inflating: /u01/app/12.2.0.1/GRID/addnode/addnode.pl
inflating: /u01/app/12.2.0.1/GRID/addnode/addnode.sh
inflating: /u01/app/12.2.0.1/GRID/addnode/addnode_oraparam.ini
…
…
inflating: /u01/app/12.2.0.1/GRID/xdk/mesg/lsxtr.msb
inflating: /u01/app/12.2.0.1/GRID/xdk/mesg/lpxel.msb
inflating: /u01/app/12.2.0.1/GRID/xdk/mesg/jznpt.msb
inflating: /u01/app/12.2.0.1/GRID/xdk/mesg/lsxja.msb
finishing deferred symbolic links:
/u01/app/12.2.0.1/GRID/bin/lbuilder -> ../nls/lbuilder/lbuilder
/u01/app/12.2.0.1/GRID/javavm/admin/libjtcjt.so -> ../../javavm/jdk/jdk8/admin/libjtcjt.so
/u01/app/12.2.0.1/GRID/javavm/admin/lfclasses.bin -> ../../javavm/jdk/jdk8/admin/lfclasses.bin
/u01/app/12.2.0.1/GRID/javavm/admin/classes.bin -> ../../javavm/jdk/jdk8/admin/classes.bin
/u01/app/12.2.0.1/GRID/javavm/admin/cbp.jar -> ../../javavm/jdk/jdk8/admin/cbp.jar
/u01/app/12.2.0.1/GRID/javavm/lib/sunjce_provider.jar -> ../../javavm/jdk/jdk8/lib/sunjce_provider.jar
/u01/app/12.2.0.1/GRID/javavm/lib/jce.jar -> ../../javavm/jdk/jdk8/lib/jce.jar
/u01/app/12.2.0.1/GRID/javavm/lib/security/US_export_policy.jar -> ../../../javavm/jdk/jdk8/lib/security/US_export_policy.jar
/u01/app/12.2.0.1/GRID/javavm/lib/security/java.security -> ../../../javavm/jdk/jdk8/lib/security/java.security
/u01/app/12.2.0.1/GRID/javavm/lib/security/local_policy.jar -> ../../../javavm/jdk/jdk8/lib/security/local_policy.jar
/u01/app/12.2.0.1/GRID/javavm/lib/security/cacerts -> ../../../javavm/jdk/jdk8/lib/security/cacerts
/u01/app/12.2.0.1/GRID/jdk/bin/ControlPanel -> jcontrol
/u01/app/12.2.0.1/GRID/jdk/jre/bin/ControlPanel -> jcontrol
/u01/app/12.2.0.1/GRID/jdk/jre/lib/amd64/server/libjsig.so -> ../libjsig.so
/u01/app/12.2.0.1/GRID/lib/libclntshcore.so -> libclntshcore.so.12.1
/u01/app/12.2.0.1/GRID/lib/libjavavm12.a -> ../javavm/jdk/jdk8/lib/libjavavm12.a
/u01/app/12.2.0.1/GRID/lib/libodm12.so -> libodmd12.so
/u01/app/12.2.0.1/GRID/lib/libagtsh.so -> libagtsh.so.1.0
/u01/app/12.2.0.1/GRID/lib/libclntsh.so -> libclntsh.so.12.1
/u01/app/12.2.0.1/GRID/lib/libocci.so -> libocci.so.12.1

This 12.2.0.1 image has a bug that forces a server reboot while root.sh runs during cluster setup. To avoid the reboot, apply the bug fix as a one-off patch to the grid home before continuing with the GI installation.

==============================
> ACFS-9154: Loading 'oracleoks.ko' driver.
> modprobe: ERROR: could not insert 'oracleoks': Unknown symbol in module, or unknown parameter (see dmesg)
> ACFS-9109: oracleoks.ko driver failed to load.
> ACFS-9178: Return code = USM_FAIL
> ACFS-9177: Return from 'ldusmdrvs'
> ACFS-9428: Failed to load ADVM/ACFS drivers. A system reboot is recommended.
==============================

root.sh fails with the following error; the workaround is given below:

2020/10/18 07:20:44 CLSRSC-400: A system reboot is required to continue installing.
The command '/u01/app/12.2.0.1/GRID/perl/bin/perl -I/u01/app/12.2.0.1/GRID/perl/lib -I/u01/app/12.2.0.1/GRID/crs/install /u01/app/12.2.0.1/GRID/crs/install/rootcrs.pl ' execution failed
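When root.sh output has been saved to a file, this condition can be spotted with a short check. The sketch below is illustrative: the function names are invented for this example, and the second helper relies on the "Executing installation step N of M" progress lines that root.sh prints during a run.

```shell
#!/usr/bin/env bash
# Sketch: inspect saved root.sh output read from standard input.
# reboot_required and last_install_step are hypothetical helper names.

# Succeed if the output contains the "reboot required" message.
reboot_required() {
  grep -q 'CLSRSC-400'
}

# Print the last "installation step N of M" marker found in the output.
last_install_step() {
  grep -Eo 'installation step [0-9]+ of [0-9]+' | tail -n 1
}

# Example with the message shown above.
sample_log="2020/10/18 07:20:44 CLSRSC-400: A system reboot is required to continue installing."
if printf '%s\n' "$sample_log" | reboot_required; then
  echo "reboot or one-off patch needed"
fi
```

Each helper consumes its input, so feed the log file to them separately, for example `reboot_required < root_sh_output.log` followed by `last_install_step < root_sh_output.log` (the file name is an assumption).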

Workaround / Solution:

Apply the one-off patch as shown below, or reboot the server once root.sh has failed with the above error.

Patch 25078431 is for RHEL/OEL 7.3
Patch 26247490 is for RHEL/OEL 7.4

In my case I applied one-off patch 26247490 to the extracted Gold Image to continue the installation.

Configure VNC on your Linux box and execute the following:

./gridSetup.sh -applyOneOffs /SOFT/26247490/26247490/26247490

It applies the patch, and after that the grid installation continues.
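A quick way to confirm that the patch is registered is to search inventory output for a line naming it. This is a minimal sketch: `patch_is_listed` is a made-up helper name, and the pattern assumes the "Patch NNN : applied on ..." line format seen in OPatch inventory listings.

```shell
#!/usr/bin/env bash
# Sketch: check whether a patch number appears as applied in inventory output.
# patch_is_listed is a hypothetical helper; input is read from standard input.

patch_is_listed() {
  local patch_id="$1"
  grep -Eq "Patch[[:space:]]+${patch_id}[[:space:]]*:[[:space:]]*applied on"
}

# Example with an inventory line of the expected shape.
sample="Patch 26247490 : applied on Sat Oct 17 08:39:09 EDT 2020"
if printf '%s\n' "$sample" | patch_is_listed 26247490; then
  echo "patch 26247490 is listed"
else
  echo "patch 26247490 is NOT listed"
fi
```

On the node itself the input could come from `$ORACLE_HOME/OPatch/opatch lsinventory -oh /u01/app/12.2.0.1/GRID`, assuming ORACLE_HOME points at the grid home.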

Verify the log of the applied one-off patch:

[oracle@rac131 opatch]$ more opatch2020-10-19_03-26-00AM_1.log
[Oct 19, 2020 3:26:01 AM] OUI exists, the oraclehome is OUI based.
[Oct 19, 2020 3:26:01 AM] OUI exists, the oraclehome is OUI based.
[Oct 19, 2020 3:26:01 AM] OPatch invoked as follows: 'lsinventory -oh /u01/app/12.2.0.1/GRID -local -invPtrLoc /u01/app/12.2.0.1/GRID/oraInst.loc '
[Oct 19, 2020 3:26:01 AM] OUI-67077:
Oracle Home : /u01/app/12.2.0.1/GRID
Central Inventory : /u01/app/oraInventory
from : /u01/app/12.2.0.1/GRID/oraInst.loc
OPatch version : 12.2.0.1.6
OUI version : 12.2.0.1.4
OUI location : /u01/app/12.2.0.1/GRID/oui
Log file location : /u01/app/12.2.0.1/GRID/cfgtoollogs/opatch/opatch2020-10-19_03-26-00AM_1.log
[Oct 19, 2020 3:26:01 AM] Patch history file: /u01/app/12.2.0.1/GRID/cfgtoollogs/opatch/opatch_history.txt
[Oct 19, 2020 3:26:01 AM] Starting LsInventorySession at Mon Oct 19 03:26:01 EDT 2020
[Oct 19, 2020 3:26:02 AM] [OPSR-MEMORY-1] : after installInventory.getAllCompsVect() call : 39 (MB)
[Oct 19, 2020 3:26:02 AM] [OPSR-MEMORY-2] : after loading rawOneOffList39 (MB)
[Oct 19, 2020 3:26:02 AM] Lsinventory Output file location : /u01/app/12.2.0.1/GRID/cfgtoollogs/opatch/lsinv/lsinventory2020-10-19_03-26-00AM.txt
[Oct 19, 2020 3:26:02 AM] --------------------------------------------------------------------------------
[Oct 19, 2020 3:26:02 AM] [OPSR-MEMORY-3] : before loading cooked one off : 39 (MB)
[Oct 19, 2020 3:26:03 AM] [OPSR-MEMORY-4] : after filling cookedOneOffs and when inventory is loaded.. : 47 (MB)
[Oct 19, 2020 3:26:03 AM] Local Machine Information::
Hostname: rac131.ora.com
ARU platform id: 226
ARU platform description:: Linux x86-64
[Oct 19, 2020 3:26:03 AM] Installed Top-level Products (1):
[Oct 19, 2020 3:26:03 AM] Oracle Grid Infrastructure 12c 12.2.0.1.0
[Oct 19, 2020 3:26:03 AM] There are 1 products installed in this Oracle Home.
[Oct 19, 2020 3:26:03 AM] Interim patches (1) :
[Oct 19, 2020 3:26:03 AM] Patch 26247490 : applied on Sat Oct 17 08:39:09 EDT 2020
Unique Patch ID: 22158925
Patch description: "ACFS Interim patch for 26247490"
Created on 5 Dec 2018, 00:27:58 hrs PST8PDT
Bugs fixed:
21129279, 22591010, 23152694, 23181299, 23625427, 24285969, 24346777
24652931, 24661214, 24674652, 24679041, 24690973, 24964969, 25078431
25098392, 25375360, 25381434, 25480028, 25491831, 25526314, 25549648
25560948, 25726952, 25764672, 25826440, 25966987, 26051087, 26085458
26247490, 26275740, 26396215, 26625494, 26667459, 26730740, 26740931
26759355, 26844019, 26871374, 26912733, 26987877, 26990202, 27012440
27027294, 27065091, 27081038, 27163313, 27223171, 27230645, 27333978
27339654, 27573409
[Oct 19, 2020 3:26:03 AM] --------------------------------------------------------------------------------
[Oct 19, 2020 3:26:03 AM] Finishing LsInventorySession at Mon Oct 19 03:26:03 EDT 2020

Click on Next to proceed…

Select and Click on Next

Select and Click on Next

Add Scan Name and Click on Next

Select Public and Private interconnect

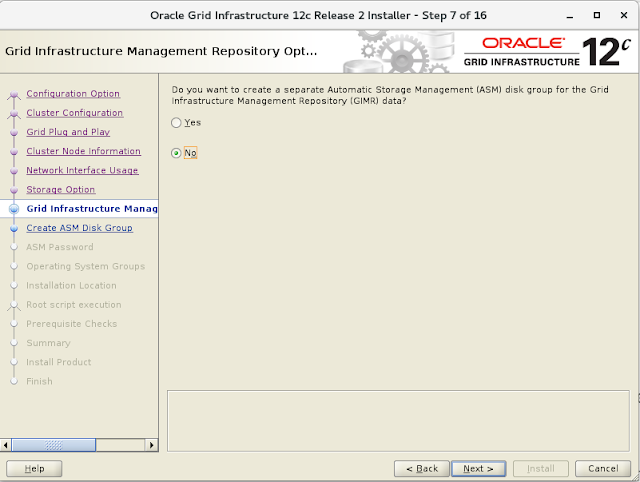

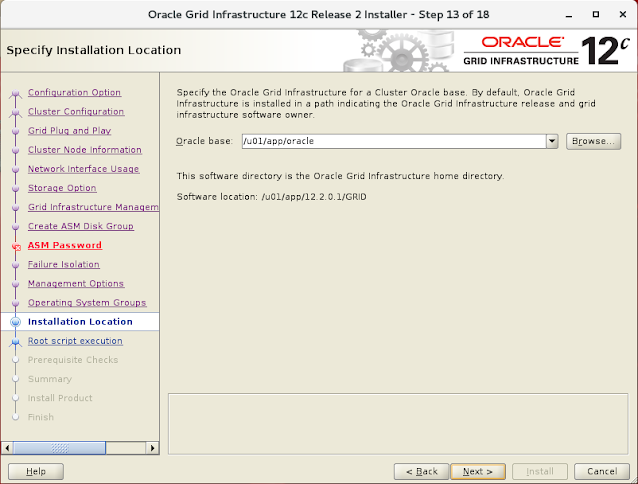

Select No for GIMR

I am not letting the installer run the root.sh script automatically; I will run it manually. Click Next.

Save the responsefile and Click Next

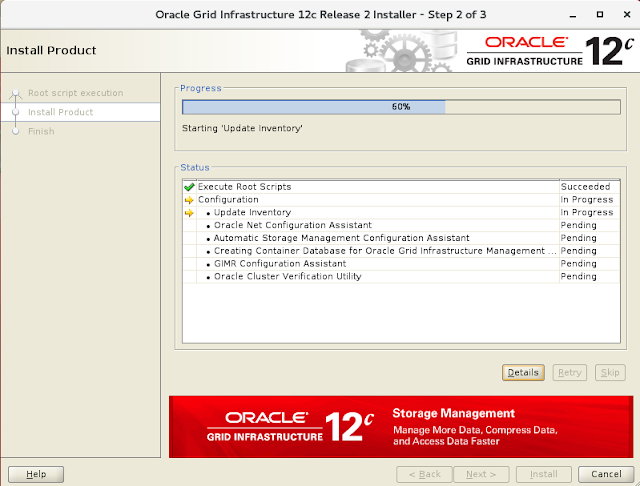

While root.sh was running, my server rebooted because of heavy resource utilization. So I added one more CPU to both VMs and increased the RAM from 10 GB to 12+ GB; after that, root.sh executed successfully. Because the server reboot closed my OUI installer session, I executed runInstaller for ExecuteConfigtools using the response file to resume the installation from where it had stopped.

[root@rac131 ~]# lscpu
Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Byte Order: Little Endian
CPU(s): 2
On-line CPU(s) list: 0,1
Thread(s) per core: 1
Core(s) per socket: 2
Socket(s): 1
NUMA node(s): 1
Vendor ID: GenuineIntel
CPU family: 6
Model: 60
Model name: Intel(R) Core(TM) i7-4770K CPU @ 3.50GHz
Stepping: 3
CPU MHz: 3491.911
BogoMIPS: 6983.82
Hypervisor vendor: Innotek GmbH
Virtualization type: full
L1d cache: 32K
L1i cache: 32K
L2 cache: 256K
L3 cache: 8192K
NUMA node0 CPU(s): 0,1

Root.sh on Node1

[oracle@rac131 ~]$ sudo /u01/app/12.2.0.1/GRID/root.sh
Performing root user operation.
The following environment variables are set as:
ORACLE_OWNER= oracle
ORACLE_HOME= /u01/app/12.2.0.1/GRID
Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The contents of "oraenv" have not changed. No need to overwrite.
The contents of "coraenv" have not changed. No need to overwrite.
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Relinking oracle with rac_on option
Using configuration parameter file: /u01/app/12.2.0.1/GRID/crs/install/crsconfig_params
The log of current session can be found at:
/u01/app/oracle/crsdata/rac131/crsconfig/rootcrs_rac131_2020-10-17_10-38-39AM.log
2020/10/17 10:39:05 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.
2020/10/17 10:39:05 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.
2020/10/17 10:39:07 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.
2020/10/17 10:39:08 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.
2020/10/17 10:39:25 CLSRSC-363: User ignored prerequisites during installation
2020/10/17 10:39:25 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.
2020/10/17 10:39:38 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.
2020/10/17 10:39:43 CLSRSC-594: Executing installation step 5 of 19: 'SaveParamFile'.
2020/10/17 10:39:58 CLSRSC-594: Executing installation step 6 of 19: 'SetupOSD'.
2020/10/17 10:40:00 CLSRSC-594: Executing installation step 7 of 19: 'CheckCRSConfig'.
2020/10/17 10:40:05 CLSRSC-594: Executing installation step 8 of 19: 'SetupLocalGPNP'.
2020/10/17 10:40:24 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.
2020/10/17 10:40:35 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.
2020/10/17 10:40:37 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.
2020/10/17 10:40:46 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.
2020/10/17 10:40:58 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.
2020/10/17 10:41:32 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.
2020/10/17 10:41:55 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.
2020/10/17 10:42:29 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac131'
CRS-2673: Attempting to stop 'ora.crsd' on 'rac131'
CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'rac131'
CRS-2673: Attempting to stop 'ora.ons' on 'rac131'
CRS-2677: Stop of 'ora.ons' on 'rac131' succeeded
CRS-2673: Attempting to stop 'ora.net1.network' on 'rac131'
CRS-2677: Stop of 'ora.net1.network' on 'rac131' succeeded
CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rac131' has completed
CRS-2677: Stop of 'ora.crsd' on 'rac131' succeeded
CRS-2673: Attempting to stop 'ora.storage' on 'rac131'
CRS-2673: Attempting to stop 'ora.crf' on 'rac131'
CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rac131'
CRS-2673: Attempting to stop 'ora.gpnpd' on 'rac131'
CRS-2673: Attempting to stop 'ora.mdnsd' on 'rac131'
CRS-2677: Stop of 'ora.drivers.acfs' on 'rac131' succeeded
CRS-2677: Stop of 'ora.gpnpd' on 'rac131' succeeded
CRS-2677: Stop of 'ora.storage' on 'rac131' succeeded
CRS-2673: Attempting to stop 'ora.asm' on 'rac131'
CRS-2677: Stop of 'ora.mdnsd' on 'rac131' succeeded
CRS-2677: Stop of 'ora.crf' on 'rac131' succeeded
CRS-2677: Stop of 'ora.asm' on 'rac131' succeeded
CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac131'
CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac131' succeeded
CRS-2673: Attempting to stop 'ora.ctssd' on 'rac131'
CRS-2673: Attempting to stop 'ora.evmd' on 'rac131'
CRS-2677: Stop of 'ora.ctssd' on 'rac131' succeeded
CRS-2677: Stop of 'ora.evmd' on 'rac131' succeeded
CRS-2673: Attempting to stop 'ora.cssd' on 'rac131'
CRS-2677: Stop of 'ora.cssd' on 'rac131' succeeded
CRS-2673: Attempting to stop 'ora.gipcd' on 'rac131'
CRS-2677: Stop of 'ora.gipcd' on 'rac131' succeeded
CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac131' has completed
CRS-4133: Oracle High Availability Services has been stopped.

2020/10/17 10:42:59 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac131'

CRS-2672: Attempting to start 'ora.evmd' on 'rac131'

CRS-2676: Start of 'ora.mdnsd' on 'rac131' succeeded

CRS-2676: Start of 'ora.evmd' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac131'

CRS-2676: Start of 'ora.gpnpd' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'rac131'

CRS-2676: Start of 'ora.gipcd' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac131'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac131'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac131'

CRS-2676: Start of 'ora.diskmon' on 'rac131' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac131'

CRS-2672: Attempting to start 'ora.ctssd' on 'rac131'

CRS-2676: Start of 'ora.ctssd' on 'rac131' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac131'

CRS-2676: Start of 'ora.asm' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'rac131'

CRS-2676: Start of 'ora.storage' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.crf' on 'rac131'

CRS-2676: Start of 'ora.crf' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rac131'

CRS-2676: Start of 'ora.crsd' on 'rac131' succeeded

CRS-6023: Starting Oracle Cluster Ready Services-managed resources

CRS-6017: Processing resource auto-start for servers: rac131

CRS-2672: Attempting to start 'ora.ons' on 'rac131'

CRS-2676: Start of 'ora.ons' on 'rac131' succeeded

CRS-6016: Resource auto-start has completed for server rac131

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2020/10/17 10:44:20 CLSRSC-343: Successfully started Oracle Clusterware stack

2020/10/17 10:44:21 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

CRS-2672: Attempting to start 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac131'

CRS-2672: Attempting to start 'ora.ASMNET2LSNR_ASM.lsnr' on 'rac131'

CRS-2676: Start of 'ora.ASMNET2LSNR_ASM.lsnr' on 'rac131' succeeded

CRS-2676: Start of 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac131'

CRS-2676: Start of 'ora.asm' on 'rac131' succeeded

CRS-2672: Attempting to start 'ora.CRS_DG.dg' on 'rac131'

CRS-2676: Start of 'ora.CRS_DG.dg' on 'rac131' succeeded

2020/10/17 10:51:26 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2020/10/17 10:54:32 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[oracle@rac131 ~]$

Do the same on node 2 (rac132).

My server rebooted during the installation, so I resumed the configuration from the point where the installer was interrupted.

To complete the GI post-installation configuration on the cluster, I followed Doc ID 1360798.1.

· Edit the response file (/u01/app/12.2.0.1/GRID/install/response/grid_2020-10-15_01-37-51PM.rsp) and add the password to the parameters below.

Note: the password in this example is oracle.

vi /u01/app/12.2.0.1/GRID/install/response/grid_2020-10-15_01-37-51PM.rsp

Edit the lines below and add the password:

oracle.install.asm.SYSASMPassword=oracle

oracle.install.asm.monitorPassword=oracle
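The same edit can be scripted instead of done by hand in vi. The following is a minimal sketch, not part of the original procedure: `set_rsp_value` is a hypothetical helper name, and it assumes the value contains no `|` or `&` characters (both are special in the sed replacement used here).

```shell
#!/bin/sh
# set_rsp_value FILE KEY VALUE  (hypothetical helper, not an Oracle tool)
# Replaces the "KEY=..." line in a response file, or appends it if the key is absent.
# Assumption: VALUE contains no '|' or '&' characters.
set_rsp_value() {
    rsp_file=$1
    key=$2
    value=$3
    if grep -q "^${key}=" "$rsp_file"; then
        # Rewrite through a temp file so the sketch does not depend on "sed -i".
        sed "s|^${key}=.*|${key}=${value}|" "$rsp_file" > "${rsp_file}.tmp" &&
            mv "${rsp_file}.tmp" "$rsp_file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$rsp_file"
    fi
}

# Example usage with the response file from this post (commented out on purpose):
# RSP=/u01/app/12.2.0.1/GRID/install/response/grid_2020-10-15_01-37-51PM.rsp
# set_rsp_value "$RSP" oracle.install.asm.SYSASMPassword oracle
# set_rsp_value "$RSP" oracle.install.asm.monitorPassword oracle
```

For 12.2, my understanding is that the configuration tools are then re-run with `gridSetup.sh -executeConfigTools -responseFile <response file>` from the Grid home; treat that as an assumption and confirm the exact command against Doc ID 1360798.1 before running it. Remove the plain-text passwords from the response file afterwards.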

The Grid Infrastructure installation on the cluster has now completed successfully. Next, validate the services on both nodes.

[oracle@rac131 ~]$ crsctl check cluster -all

**************************************************************

rac131:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

rac132:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online
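If this check needs to be repeated, for example after a node reboot, the output can be filtered rather than read by eye. A minimal sketch, assuming the message layout shown above; `check_cluster_online` is a hypothetical function name, and any CRS message that does not end in "is online" is treated as a problem.

```shell
#!/bin/sh
# Reads `crsctl check cluster -all` output on stdin.
# Prints every CRS-nnnn line that does not end in "is online"
# and returns 1 if at least one such line was seen, 0 otherwise.
check_cluster_online() {
    awk '
        /^CRS-[0-9]+:/ && !/is online[[:space:]]*$/ { print; bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}

# Example usage (commented out; needs a running cluster):
# crsctl check cluster -all | check_cluster_online && echo "all services online"
```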

Moving to the 12cR2 DB installation on this cluster

Installing a database on a 12cR2 cluster is the same as in earlier releases.

Select Create and Configure Database and click Next.

Select Server Class and click Next.

Select RAC Database and click Next.

Select the Administrator Managed policy and click Next.

Select both nodes and click Next.

Select Advanced and click Next.

Select Enterprise Edition and click Next.

Set the DB home and click Next.

Enter the database name. I am not creating a PDB right now; I will do that later. Click Next.

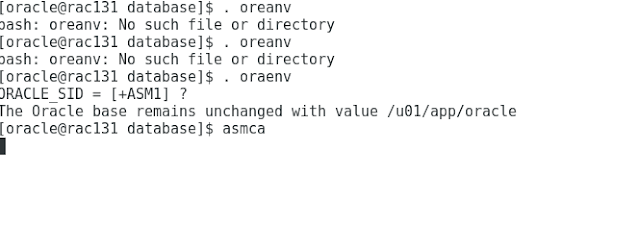

Set the SGA size and the database character set, then open a new terminal and start asmca in GUI mode. The ASM data disk group can also be created manually, but I am using ASMCA to create the DATA disk group.

Click Disk Groups in the left tree panel, then click Create to create the DATA disk group.

Select the available ASM disks for the disk group.

The disk group has now been created.
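As noted earlier, the DATA disk group can also be created manually instead of through ASMCA. The sketch below only builds the `CREATE DISKGROUP` statement; `create_diskgroup_sql` is a hypothetical helper, and the disk names `ORCL:DATA01` and `ORCL:DATA02` are illustrative ASMLIB labels, not the ones used in this setup. It leaves out the compatibility attributes (for example `compatible.asm`) that ASMCA sets, which would probably need to be added with an `ATTRIBUTE` clause before a 12.2 database can store its password file in the disk group.

```shell
#!/bin/sh
# create_diskgroup_sql NAME REDUNDANCY DISK...  (hypothetical helper)
# Prints a CREATE DISKGROUP statement; it does not connect to ASM itself.
create_diskgroup_sql() {
    dg_name=$1
    redundancy=$2
    shift 2
    disks=""
    for d in "$@"; do
        # Quote each disk path and separate entries with ", ".
        disks="${disks:+$disks, }'$d'"
    done
    printf 'CREATE DISKGROUP %s %s REDUNDANCY DISK %s;\n' "$dg_name" "$redundancy" "$disks"
}

# Example usage (commented out; assumes the Grid environment and ORACLE_SID of the
# local ASM instance are set, and that the disk labels exist):
# create_diskgroup_sql DATA EXTERNAL 'ORCL:DATA01' 'ORCL:DATA02' | sqlplus -s / as sysasm
```

A disk group created this way from one node is only mounted on that node, so it would still have to be mounted on the other ASM instance.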

Now return to the DB installer screen and select the DATA disk group for the database datafiles and redo logs. I am using the DATA disk group for datafiles, redo logs, tempfiles and archive logs.

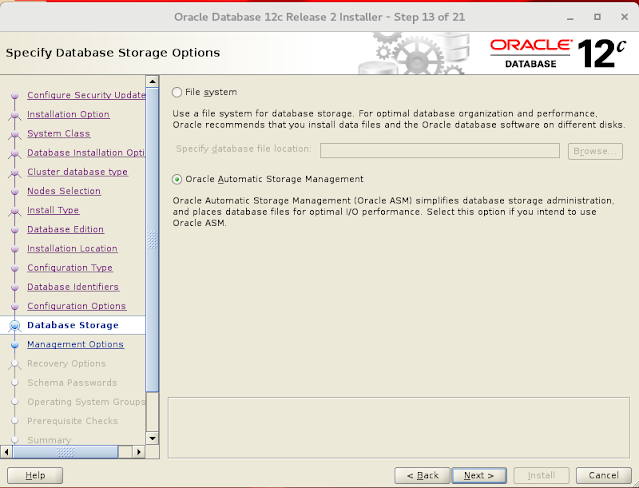

Select Oracle Automatic Storage Management.

If OEM is already running, the database can be registered with it directly from this screen. I am skipping that here and will do it later.

Click Next.

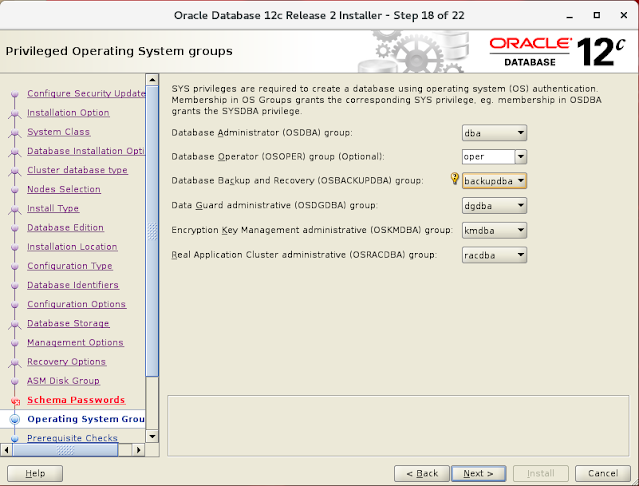

Specify the database passwords and select the appropriate OS groups.

Fix any failed prerequisite checks.

Save the response file and click Install to complete the database installation. This takes some time, depending on the available system resources.

Run root.sh to complete the installation.

The database software is now installed and the cluster database has been created. Next, validate the cluster and database services.

Check the Status of the RAC

[oracle@rac131 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name           Target  State        Server                   State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

ora.ASMNET2LSNR_ASM.lsnr

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

ora.CRS_DG.dg

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

ora.DATA.dg

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

ora.LISTENER.lsnr

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

ora.chad

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

ora.net1.network

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

ora.ons

               ONLINE  ONLINE       rac131                   STABLE

               ONLINE  ONLINE       rac132                   STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

      1        ONLINE  ONLINE       rac131                   STABLE

ora.LISTENER_SCAN2.lsnr

      1        ONLINE  ONLINE       rac132                   STABLE

ora.LISTENER_SCAN3.lsnr

      1        ONLINE  ONLINE       rac132                   STABLE

ora.MGMTLSNR

      1        ONLINE  ONLINE       rac132                   169.254.48.207 10.10.10.132 20.20.20.132,STABLE

ora.asm

      1        ONLINE  ONLINE       rac131                   Started,STABLE

      2        ONLINE  ONLINE       rac132                   Started,STABLE

      3        OFFLINE OFFLINE                               STABLE

ora.ccbprod.db

      1        ONLINE  ONLINE       rac131                   Open,HOME=/u01/app/oracle/product/12.2.0.1/DB_1,STABLE

      2        ONLINE  ONLINE       rac132                   Open,HOME=/u01/app/oracle/product/12.2.0.1/DB_1,STABLE

ora.cvu

      1        ONLINE  ONLINE       rac132                   STABLE

ora.mgmtdb

      1        ONLINE  ONLINE       rac132                   Open,STABLE

ora.qosmserver

      1        ONLINE  ONLINE       rac132                   STABLE

ora.rac131.vip

      1        ONLINE  ONLINE       rac131                   STABLE

ora.rac132.vip

      1        ONLINE  ONLINE       rac132                   STABLE

ora.scan1.vip

      1        ONLINE  ONLINE       rac131                   STABLE

ora.scan2.vip

      1        ONLINE  ONLINE       rac132                   STABLE

ora.scan3.vip

      1        ONLINE  ONLINE       rac132                   STABLE
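With this many resources, it is easy to miss one that is not up. The sketch below scans the tabular output for rows whose Target is ONLINE but whose State is something else, so an intentionally stopped entry such as the third ora.asm instance above is ignored. `report_not_online` is a hypothetical name, and the parsing assumes the `crsctl stat res -t` layout shown here with resource names starting with `ora.`; the tabular format is meant for humans, so treat this as a convenience check only.

```shell
#!/bin/sh
# Reads `crsctl stat res -t` output on stdin and prints one line per resource
# instance whose Target is ONLINE while its State is not ONLINE.
report_not_online() {
    awk '
        /^ora\./ { res = $1; next }            # remember the current resource name
        {
            # Cluster resource rows start with an instance number, local rows do not.
            i = ($1 ~ /^[0-9]+$/) ? 2 : 1
            target = $i
            state = $(i + 1)
            if (target == "ONLINE" && state != "" && state != "ONLINE")
                print res ": target=" target " state=" state
        }
    '
}

# Example usage (commented out; needs a running cluster):
# crsctl stat res -t | report_not_online
```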

Database validation

[oracle@rac131 ~]$ srvctl config database -d ccbprod

Database unique name: ccbprod

Database name: ccbprod

Oracle home: /u01/app/oracle/product/12.2.0.1/DB_1

Oracle user: oracle

Spfile: +DATA/CCBPROD/PARAMETERFILE/spfile.268.1054042269

Password file: +DATA/CCBPROD/PASSWORD/pwdccbprod.256.1054041797

Domain: ora.com

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools:

Disk Groups: DATA

Mount point paths:

Services:

Type: RAC

Start concurrency:

Stop concurrency:

OSDBA group: dba

OSOPER group: oper

Database instances: ccbprod1,ccbprod2

Configured nodes: rac131,rac132

CSS critical: no

CPU count: 0

Memory target: 0

Maximum memory: 0

Default network number for database services:

Database is administrator managed

[oracle@rac131 ~]$ srvctl status database -d ccbprod

Instance ccbprod1 is running on node rac131

Instance ccbprod2 is running on node rac132

[oracle@rac131 ~]$
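The status check can be turned into a pass/fail test for scripts. A minimal sketch, assuming the "Instance ... is running on node ..." wording shown above; `all_instances_running` is a hypothetical function name and the expected instance count is passed in by the caller.

```shell
#!/bin/sh
# Reads `srvctl status database -d <name>` output on stdin.
# Succeeds only if exactly EXPECTED instances are reported as running.
all_instances_running() {
    expected=$1
    # grep -c prints 0 and exits non-zero when nothing matches, hence "|| true".
    running=$(grep -c '^Instance .* is running on node ' || true)
    [ "$running" -eq "$expected" ]
}

# Example usage for the two-node database in this post (commented out):
# srvctl status database -d ccbprod | all_instances_running 2 && echo "both instances up"
```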
